The graphics pipeline uses a series of steps to transform three-dimensional (or two-dimensional) data into two-dimensional data that can be displayed. In general, the output is a large pixel matrix, or "frame buffer". Three types of shaders are in common use (pixel, vertex, and geometry shaders), with several more added recently.
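As a rough illustration of how three-dimensional data ends up in a two-dimensional pixel matrix, the sketch below projects a few 3D points onto a small frame buffer stored as a list of rows. The function names (`project`, `render_points`), the simple perspective divide, and the buffer size are assumptions made for this example only; a real pipeline also clips, rasterizes whole primitives, and performs depth testing.

```python
import math


def project(point, width, height, focal=1.0):
    """Map a 3D point (x, y, z) with z > 0 to integer pixel coordinates."""
    x, y, z = point
    # Perspective divide: points farther away move toward the center.
    ndc_x = focal * x / z
    ndc_y = focal * y / z
    # Map the range [-1, 1] to pixel indices; y is flipped so +y points up.
    px = math.floor((ndc_x + 1.0) * 0.5 * (width - 1) + 0.5)
    py = math.floor((1.0 - ndc_y) * 0.5 * (height - 1) + 0.5)
    return px, py


def render_points(points, width=8, height=8):
    """Return a frame buffer (list of rows) with projected points set to 1."""
    frame_buffer = [[0] * width for _ in range(height)]
    for point in points:
        if point[2] <= 0:
            continue  # behind the viewer: skipped in this simplified sketch
        px, py = project(point, width, height)
        if 0 <= px < width and 0 <= py < height:
            frame_buffer[py][px] = 1
    return frame_buffer
```

Calling `render_points([(0.0, 0.0, 1.0)], 9, 9)` sets only the center entry of a 9 by 9 buffer, since a point on the viewing axis projects to the middle of the image.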

SHADER MODEL 3.0 FIX SERIES

Shaders are simple programs that describe the traits of either a vertex or a pixel. Vertex shaders describe the attributes (position, texture coordinates, colors, etc.) of a vertex, while pixel shaders describe the traits (color, z-depth, and alpha value) of a pixel. A vertex shader is called for each vertex in a primitive (possibly after tessellation); thus, one vertex in, one (updated) vertex out. Each vertex is then rendered as a series of pixels onto a surface (a block of memory) that will eventually be sent to the screen.

The first video card with a programmable pixel shader was the Nvidia GeForce 3 (NV20), released in 2001. The first shader-capable GPUs supported only pixel shading, but vertex shaders were quickly introduced once developers realized the power of shaders. Geometry shaders were introduced with Direct3D 10 and OpenGL 3.2. Eventually, graphics hardware evolved toward a unified shader model.
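The "one vertex in, one (updated) vertex out" contract and the per-pixel role of a pixel shader can be mimicked on the CPU. The sketch below is only an analogy: the `Vertex` class, the function names, and the `offset`, `scale`, and `tint` parameters are hypothetical, and real shaders are written in a shading language and run on the GPU.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Vertex:
    position: tuple  # (x, y, z)
    uv: tuple        # texture coordinates (u, v)
    color: tuple     # (r, g, b), each in 0.0..1.0


def vertex_shader(vertex, offset=(0.0, 0.0, 0.0), scale=1.0):
    """Called once per vertex: takes one vertex, returns one updated vertex."""
    x, y, z = vertex.position
    ox, oy, oz = offset
    new_position = (x * scale + ox, y * scale + oy, z * scale + oz)
    # Only the position changes here; uv and color pass through untouched.
    return replace(vertex, position=new_position)


def pixel_shader(color, depth, alpha=1.0, tint=(1.0, 1.0, 1.0)):
    """Called once per pixel: returns that pixel's color, z-depth and alpha."""
    shaded = tuple(min(1.0, c * t) for c, t in zip(color, tint))
    return shaded, depth, alpha


def run_vertex_stage(vertices, **params):
    """Apply the vertex shader independently to every vertex."""
    return [vertex_shader(v, **params) for v in vertices]
```

Because each call depends only on its own input, the per-vertex and per-pixel work can be done in parallel, which is what makes this model a good fit for graphics hardware.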

SHADER MODEL 3.0 FIX SOFTWARE

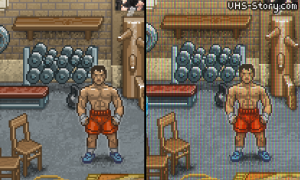

In computer graphics, a shader is a type of computer program originally used for shading in 3D scenes (the production of appropriate levels of light, darkness, and color in a rendered image). Shaders now perform a variety of specialized functions in various fields within the category of computer graphics special effects, do video post-processing unrelated to shading, or even perform functions unrelated to graphics.

Traditional shaders calculate rendering effects on graphics hardware with a high degree of flexibility. Most shaders are coded for (and run on) a graphics processing unit (GPU), though this is not a strict requirement. Shading languages are used to program the GPU's rendering pipeline, which has mostly superseded the fixed-function pipeline of the past that allowed only common geometry-transforming and pixel-shading functions; with shaders, customized effects can be used. The position and color (hue, saturation, brightness, and contrast) of all pixels, vertices, and/or textures used to construct a final rendered image can be altered using algorithms defined in a shader, and can be modified by external variables or textures introduced by the computer program calling the shader.

Shaders are used widely in cinema post-processing, computer-generated imagery, and video games to produce a range of effects. Beyond simple lighting models, more complex uses of shaders include altering the hue, saturation, brightness (HSL/HSV), or contrast of an image; producing blur, light bloom, volumetric lighting, normal mapping (for depth effects), bokeh, cel shading, posterization, bump mapping, distortion, chroma keying (for so-called "bluescreen/greenscreen" effects), and edge and motion detection; and psychedelic effects such as those seen in the demoscene.

This use of the term "shader" was introduced to the public by Pixar with version 3.0 of their RenderMan Interface Specification, originally published in May 1988. As graphics processing units evolved, major graphics software libraries such as OpenGL and Direct3D began to support shaders.

Another use of shaders is for special effects, even on 2D images. The unaltered, unshaded image is on the left, and the same image has a shader applied on the right. This shader works by replacing all light areas of the image with white, and all dark areas with a brightly colored texture.
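An effect that replaces light areas with white and dark areas with a brightly colored texture can be sketched as a per-pixel function. This is a CPU approximation in plain Python, not the shader used for the image: the brightness threshold of 0.5, the Rec. 709 luminance weights, the nearest-neighbor texture lookup, and all function names are assumptions chosen for illustration.

```python
def luminance(rgb):
    """Approximate perceived brightness of an (r, g, b) color in 0.0..1.0."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def sample(texture, uv):
    """Nearest-neighbor lookup into a texture (list of rows), wrapping at edges."""
    height = len(texture)
    width = len(texture[0])
    u, v = uv
    tx = int(u * width) % width
    ty = int(v * height) % height
    return texture[ty][tx]


def effect_shader(rgb, uv, texture, threshold=0.5):
    """Per-pixel rule: light pixels become white, dark ones take the texture."""
    if luminance(rgb) >= threshold:
        return (1.0, 1.0, 1.0)
    return sample(texture, uv)


def apply_shader(image, texture, threshold=0.5):
    """Run the effect on every pixel, passing normalized pixel-center coordinates."""
    height = len(image)
    width = len(image[0])
    return [
        [
            effect_shader(
                image[y][x],
                ((x + 0.5) / width, (y + 0.5) / height),
                texture,
                threshold,
            )
            for x in range(width)
        ]
        for y in range(height)
    ]
```

Here `image` and `texture` are lists of rows of `(r, g, b)` tuples. On a GPU the same rule would typically be written as a pixel (fragment) shader, with the texture and threshold supplied as external inputs by the calling program.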
